Best AI Detector | Free & Premium Tools Compared

AI detectors are tools designed to detect when a text was generated by an AI writing tool like ChatGPT. AI content may look convincingly human in some cases, but these tools aim to provide a way of checking for it. We’ve investigated just how accurate they really are.

To do so, we used a selection of testing texts including fully ChatGPT-generated texts, mixed AI-and-human texts, fully human texts, and texts modified by paraphrasing tools. We ran all these texts through 10 different AI detectors to see how accurately each tool labeled them.

Our research indicates that if you’re willing to pay, the most accurate AI detector available right now is Winston AI, which identified 84% of our texts correctly. If you don’t want to pay, Sapling is the best choice: it’s totally free and has 68% accuracy, the highest score among free tools.

| Tool | Accuracy | False positives | Free? | Star rating |
|---|---|---|---|---|
| 1. Winston AI | 84% | 0 | No | 4.2 |
| 2. Originality.AI | 76% | 1 | No | 3.7 |
| 3. Sapling | 68% | 0 | Yes | 3.4 |
| 4. CopyLeaks | 66% | 0 | Yes | 3.3 |
| 5. ZeroGPT | 64% | 1 | Yes | 3.1 |
| 6. GPT-2 Output Detector | 58% | 0 | Yes | 2.9 |
| 7. CrossPlag | 58% | 0 | Yes | 2.9 |
| 8. GPTZero | 52% | 1 | Yes | 2.5 |
| 9. Writer | 38% | 0 | Yes | 1.9 |
| 10. AI Text Classifier (OpenAI) | 38% | 1 | Yes | 1.8 |

General conclusions

In general, our research showed that because of how AI detectors work, they can never provide 100% accuracy. The companies behind some tools make strong claims about their reliability, but those claims are not supported by our testing. Only the premium tools we tested surpassed 70% accuracy; the best free tool, Sapling, scored 68%.

We also observed some other interesting trends:

- False positives (human-written texts flagged as AI) do happen. Four of the 10 tools we tested had a false positive, including one of the overall best tools, Originality.

- GPT-4 texts were generally harder to detect than GPT-3.5 texts. However, most tools do still detect GPT-4 texts in some cases.

- AI texts that have been combined with human text or paraphrased are hard to detect. Winston AI does best with them but still finds only 60%.

- AI detectors generally don’t detect the use of paraphrasing tools on human-written text. Of the tools we tested, only Originality detected this in more than half of cases (60%).

- AI texts on specialist topics seem slightly harder to detect than those on general topics (57% vs. 67% accuracy).

- While most detectors show a percentage, they are often binary in their judgments—showing close to 100% or close to 0% in most cases, even when a text is about half-and-half.

Overall, AI detectors shouldn’t be treated as absolute proof that a text is AI-generated, but they can provide an indication in combination with other evidence. Educators using these tools should bear in mind that they are relatively easy to get around and can sometimes produce false positives.

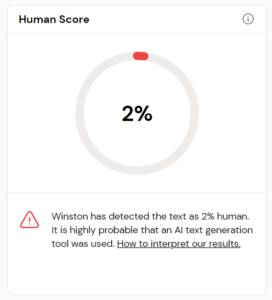

1. Winston AI

Pros

- The most accurate detection out of all the tools we tested

- No false positives

- Detects highest proportion of edited AI texts and 100% of GPT-4 texts

- Provides a percentage

- Highlights text to indicate AI content

Cons

- Costs $18 a month (after a free trial of 2,000 words or one week)

- Requires sign-up to use

- Completing a scan takes a few clicks

- Doesn’t detect use of paraphrasing tools

Winston AI stood out as the best tool we tested in terms of accuracy. It had the highest overall accuracy score at 84%, did not incorrectly label any human text as AI-generated, and detected every GPT-4 text. Additionally, it was the best tool for detecting AI content that was combined with human text or run through a paraphrasing tool (although it still caught only 60% of these texts).

The information provided is clear: a percentage and colored highlights on parts of the text that the tool considers to be AI-generated. The interface could be better, though; it requires you to click through multiple pages to complete a scan.

The main downside of the tool is its price. While most AI detectors we tested are free, Winston AI costs $18 a month, which allows you to scan 80,000 words each month. A weeklong free trial is available, but it’s capped at only 2,000 words (total, not per scan).

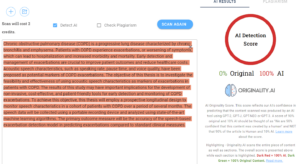

2. Originality.AI

Pros

- High accuracy

- Detects all GPT-4 texts

- Sometimes detects use of paraphrasing tools

- Gives a percentage

- Highlights text to indicate likelihood of AI content

Cons

- Costs at least $20

- Requires sign-up to use

- One false positive

- Relationship between percentage and highlighting is not very clear

Originality.AI, another premium tool, performed almost as well as Winston AI, but with slightly lower overall accuracy (76%) and one false positive. However, it was the only tool in our testing to detect the use of paraphrasing tools more than half the time (60%); if you’re interested in this kind of detection, Originality is likely the best choice.

Originality gives a percentage likelihood that a text is AI-generated and highlights text in various colors to label it as AI or human. The highlighting doesn’t always have a clear relationship to the percentage shown, though. It’s not fully clear how the user should interpret the two pieces of information.

It’s worth noting that Originality’s pricing is fairly generous at $0.01 per 100 words, but there is a minimum spend of $20. Still, for that price, you get 200,000 words, whereas Winston AI charges $18 for 80,000. It’s just unfortunate that Originality’s accuracy is lower.
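The price comparison above comes down to simple arithmetic. The sketch below works out the cost per 1,000 words for each premium tool from the figures quoted in this article. The function name is our own, and the Winston AI figure assumes you use the full 80,000-word monthly allowance, which may not match your actual usage.

```python
def cost_per_1000_words(price_usd: float, words: int) -> float:
    """Cost in dollars for every 1,000 words scanned."""
    return price_usd * 1000 / words


# Figures quoted in this article (assumption: the full allowance is used).
originality_cost = cost_per_1000_words(20, 200_000)  # $20 minimum spend
winston_cost = cost_per_1000_words(18, 80_000)       # $18 monthly plan

print(f"Originality.AI: ${originality_cost:.3f} per 1,000 words")
print(f"Winston AI:     ${winston_cost:.3f} per 1,000 words")
```

On these assumptions, Winston AI costs a little more than twice as much per word as Originality.AI.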

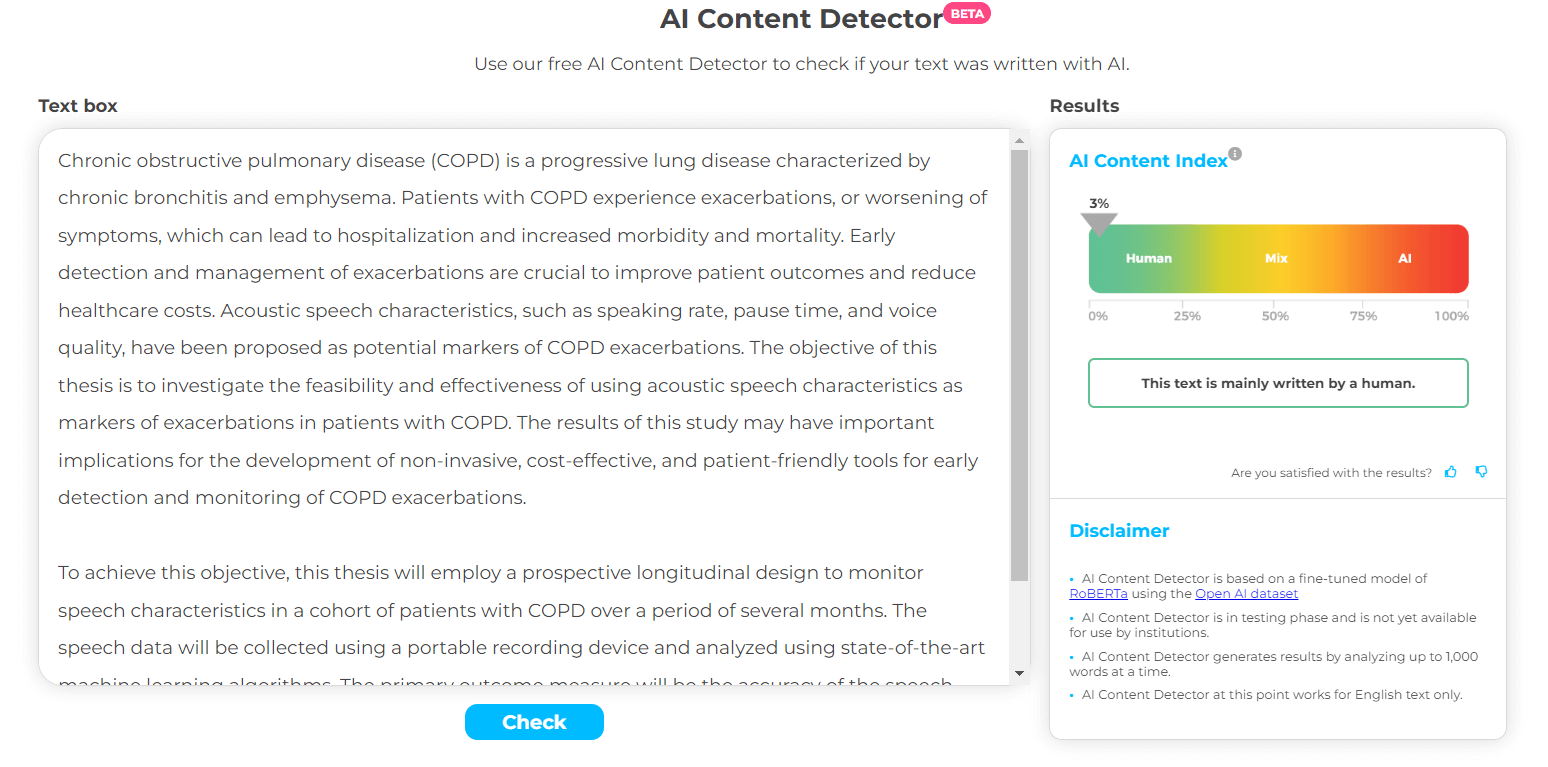

3. Sapling

Pros

- Free

- The most accurate free tool

- No false positives

- Gives a percentage

- No sign-up needed: just paste in text

Cons

- Not clear how to interpret the two different kinds of highlighting

Sapling stood out as the most accurate free tool we tested, with an overall score of 68%. It detected all GPT-3.5 texts and over half of GPT-4 texts (60%). It also had no false positives and did better than most tools at correctly highlighting the AI content in mixed AI-and-human texts.

Sapling is very quick and straightforward to use. There’s no sign-up required; you just paste in the text you want to check and get an instant result.

You get a percentage score followed by two highlighted versions of the text. It’s not really clear how the user is meant to interpret these two different highlighted texts, since they give different information. The first one is the one that matches the percentage given most closely.

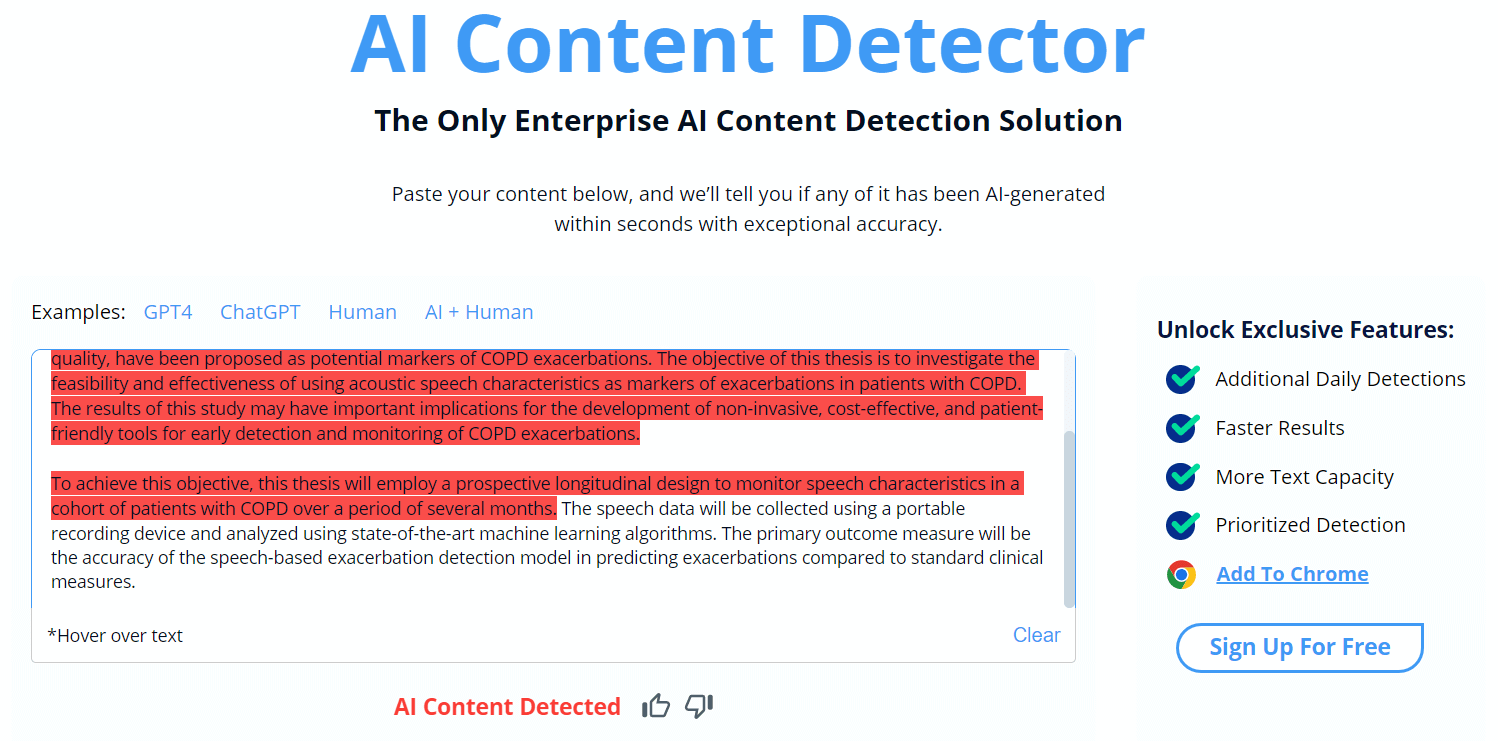

4. CopyLeaks

Pros

- Free

- Accurate for a free tool

- No false positives

- No sign-up required: just paste in text

Cons

- Doesn’t give an overall percentage

- Information provided is not clearly explained

- Limits on number of daily checks (even if you sign up)

CopyLeaks is one of the better free tools in terms of accuracy, at 66% (though this is much lower than the 99% claimed on the site). Like Sapling, it found all GPT-3.5 texts and over half of the GPT-4 texts, and it had no false positives.

However, CopyLeaks has some unfortunate downsides in terms of usability. There’s a limit on daily checks, which can be increased (but not removed) by signing up for a free account. Additionally, the results shown are very unclear compared to those of other tools.

Instead of an overall percentage, you just get a highlighted text. When you mouse over part of the text, a percentage is shown, but this is not the overall AI content percentage. It seems likely that this percentage represents the tool’s confidence in its label for that piece of text, but this is a guess—it’s not explained anywhere in the interface. As such, the tool is not user-friendly.

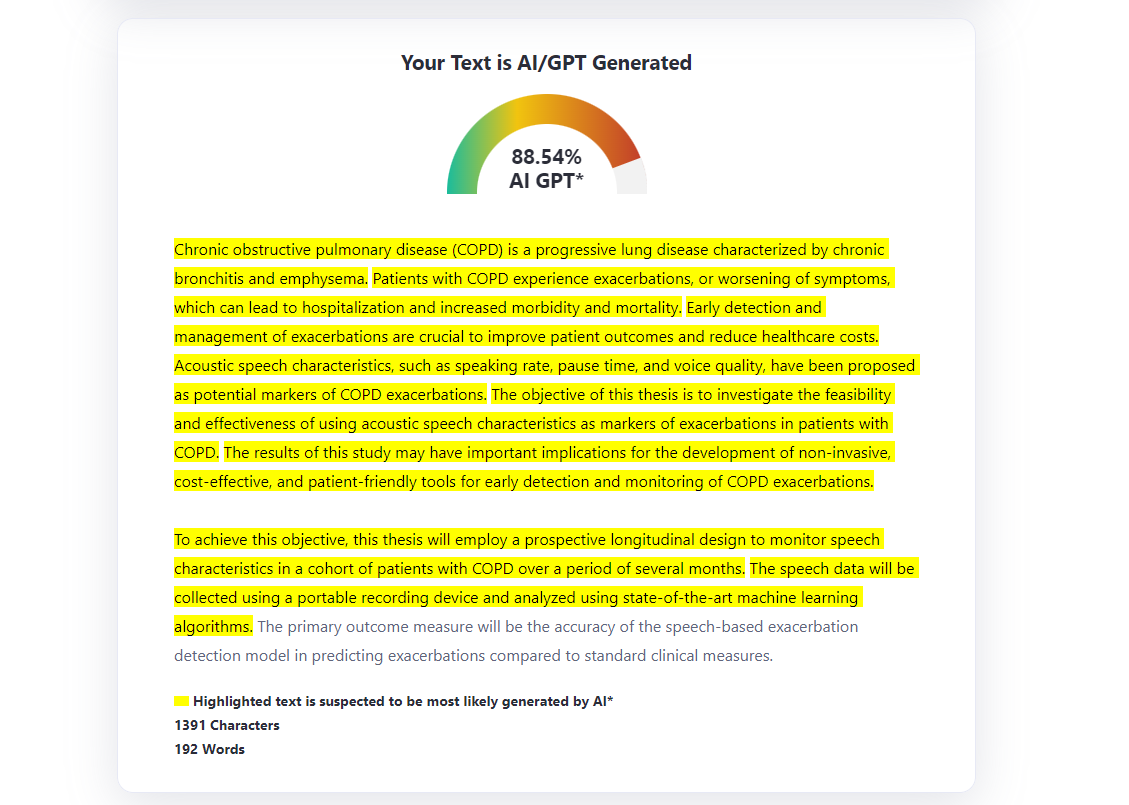

5. ZeroGPT

Pros

- Free

- Accurate for a free tool

- Gives a percentage, highlighting, and text assessment

- No sign-up required: just paste in text

Cons

- Not always clear how text assessment relates to percentage

- One false positive

- Missed one GPT-3.5 text

ZeroGPT performed quite well for a free tool, with 64% accuracy overall. It identified four of the five GPT-3.5 texts and three of the five GPT-4 texts. It performed particularly well at finding texts that consisted of paraphrased AI content or mixed AI-and-human content, finding 50% of these texts.

We found the tool straightforward to use. You can just paste in text (or upload a file) to test it immediately, and the results show a text label such as “Your Text is AI/GPT Generated,” a percentage, and text highlighting indicating which parts of the text are most likely AI.

We did find it hard to understand exactly how the text label related to the percentage, since very different percentages would sometimes show the same label, or vice versa. Additionally, the tool did have one false positive, identifying a human-written text as AI.

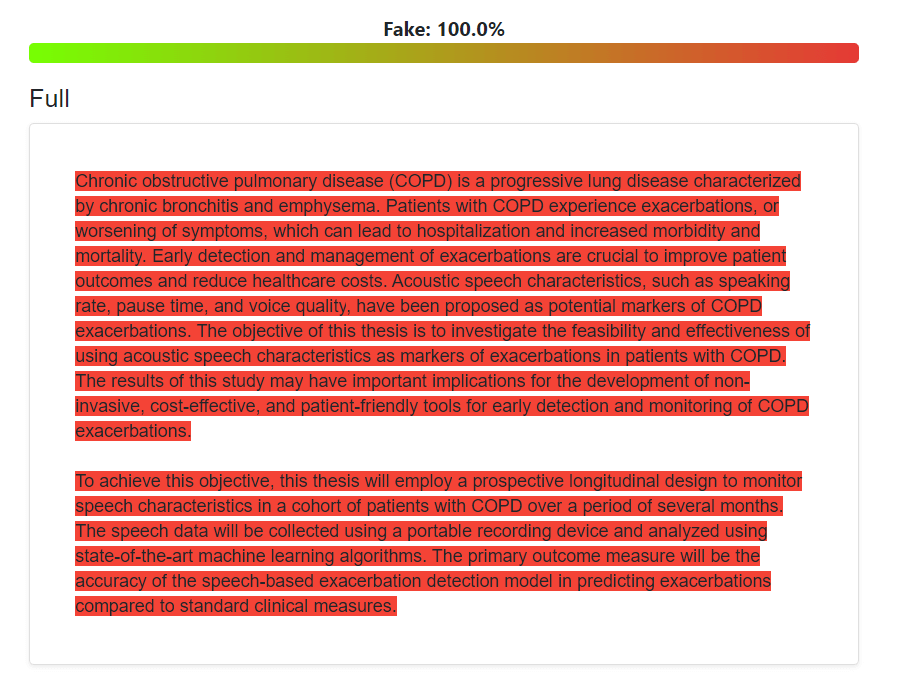

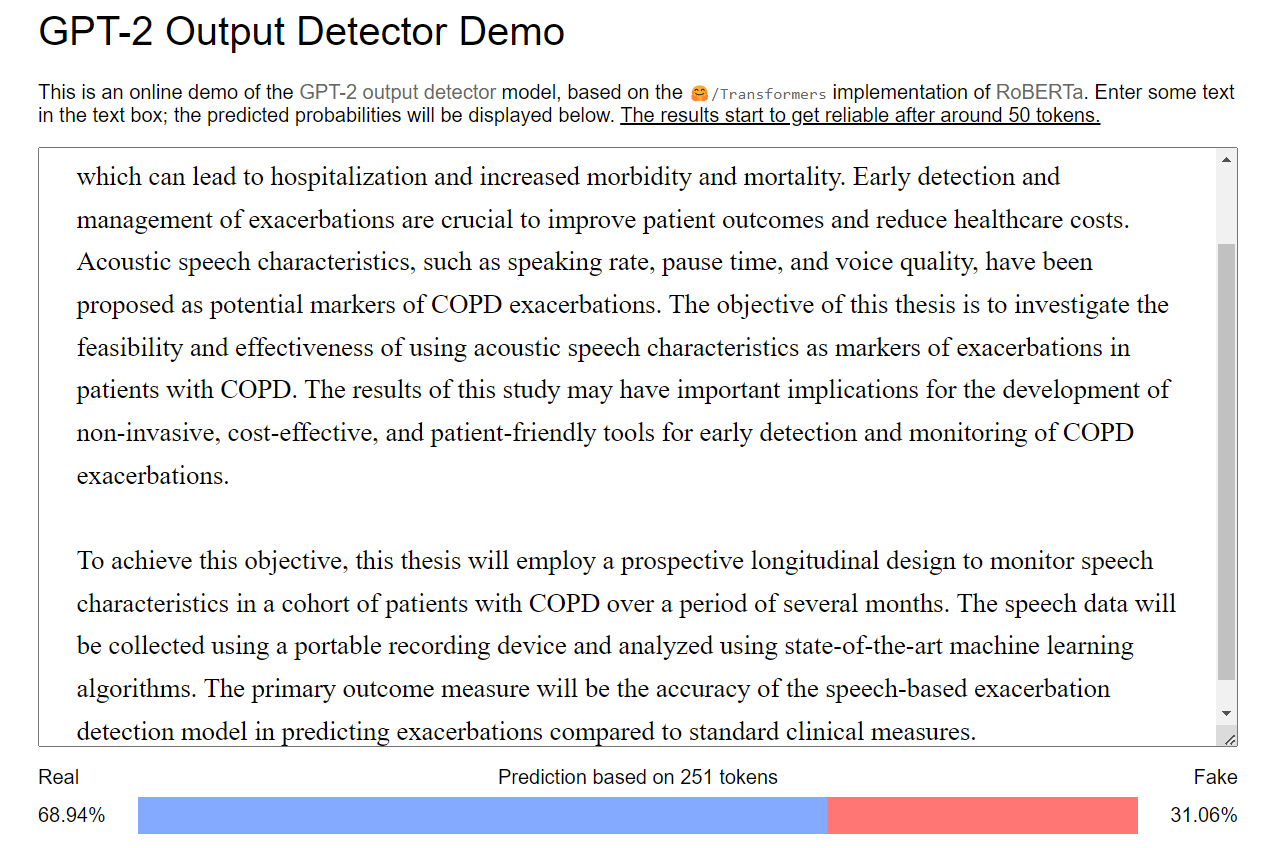

6. GPT-2 Output Detector

Pros

- Free

- No false positives

- No sign-up required: just paste in text

- Provides a percentage

Cons

- Below-average accuracy

- No text highlighting

- Missed one GPT-3.5 text

GPT-2 Output Detector performed slightly below average in our testing, at 58%. It caught the same number of GPT-4 texts as Sapling and CopyLeaks, but it missed one of the GPT-3.5 texts. It had no false positives but was otherwise not very impressive in accuracy terms.

The interface provided is simplistic but clear, and there’s no sign-up required. You simply paste in your text and get percentages representing how much text is “real” and how much “fake.” There’s no text highlighting to indicate which is which, though.

GPT-2 Output Detector is an OK option, but there’s no real reason to use it instead of the more accurate and equally accessible Sapling.

7. CrossPlag

Pros

- Free

- No false positives

- No sign-up required: just paste in text

- Provides a percentage

Cons

- Below-average accuracy

- No text highlighting

- Missed one GPT-3.5 text

CrossPlag performed at the same level of accuracy as GPT-2 Output Detector: 58%. The two tools got slightly different texts wrong, though, which suggests they’re not using identical technology. Like GPT-2 Output Detector, CrossPlag had no false positives and got one of the GPT-3.5 texts wrong.

The information provided is also very similar: just a percentage, without any text highlighting or other information. CrossPlag presents the information in a slightly more attractive interface, but there’s no real difference in terms of content.

Because of this, there’s very little distinguishing these two tools. They’re both middling options for AI detection that are outperformed by other free tools like Sapling.

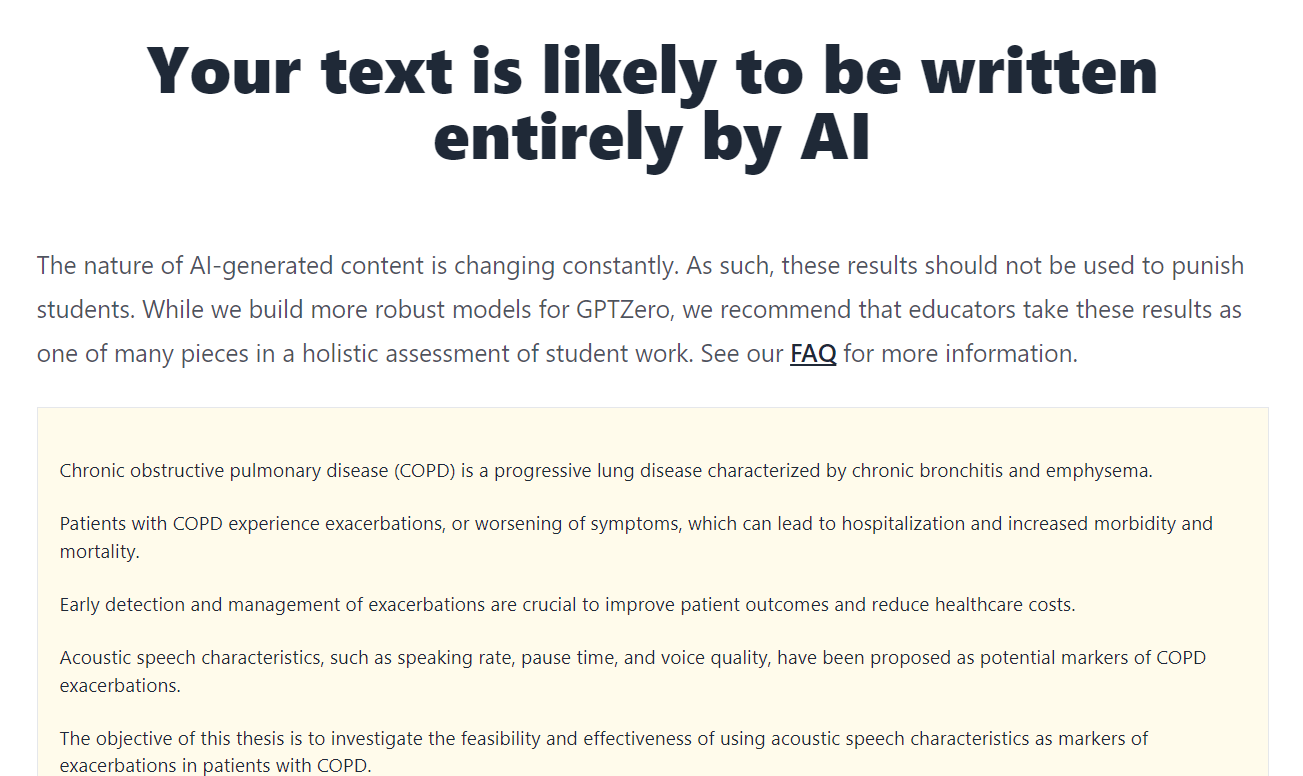

8. GPTZero

Pros

- Free

- Provides stats that other tools don’t

- Highlights text

- No sign-up required: just paste in text

Cons

- Below-average accuracy

- No percentage shown

- Only seems to give binary judgments

- One false positive

GPTZero is unusual in the way it presents its results. Instead of a percentage, it gives a sentence stating what it detected in your text (e.g., “Your text is likely to be written entirely by AI”). In our testing, it only ever said that a text was entirely AI or entirely human, suggesting it’s unable to detect mixed AI-and-human texts.

Because of these binary judgments, it got the relatively low accuracy score of 52%. The tool does also highlight text to label it AI, but again, we found that it only ever highlighted either the whole text or none of it.

Further stats—perplexity and burstiness—are shown, but these are not likely to be helpful to the average user, and it’s unclear how exactly they relate to the judgment. While GPTZero is straightforward to use, we found the information it provided to be inadequate and not very accurate.

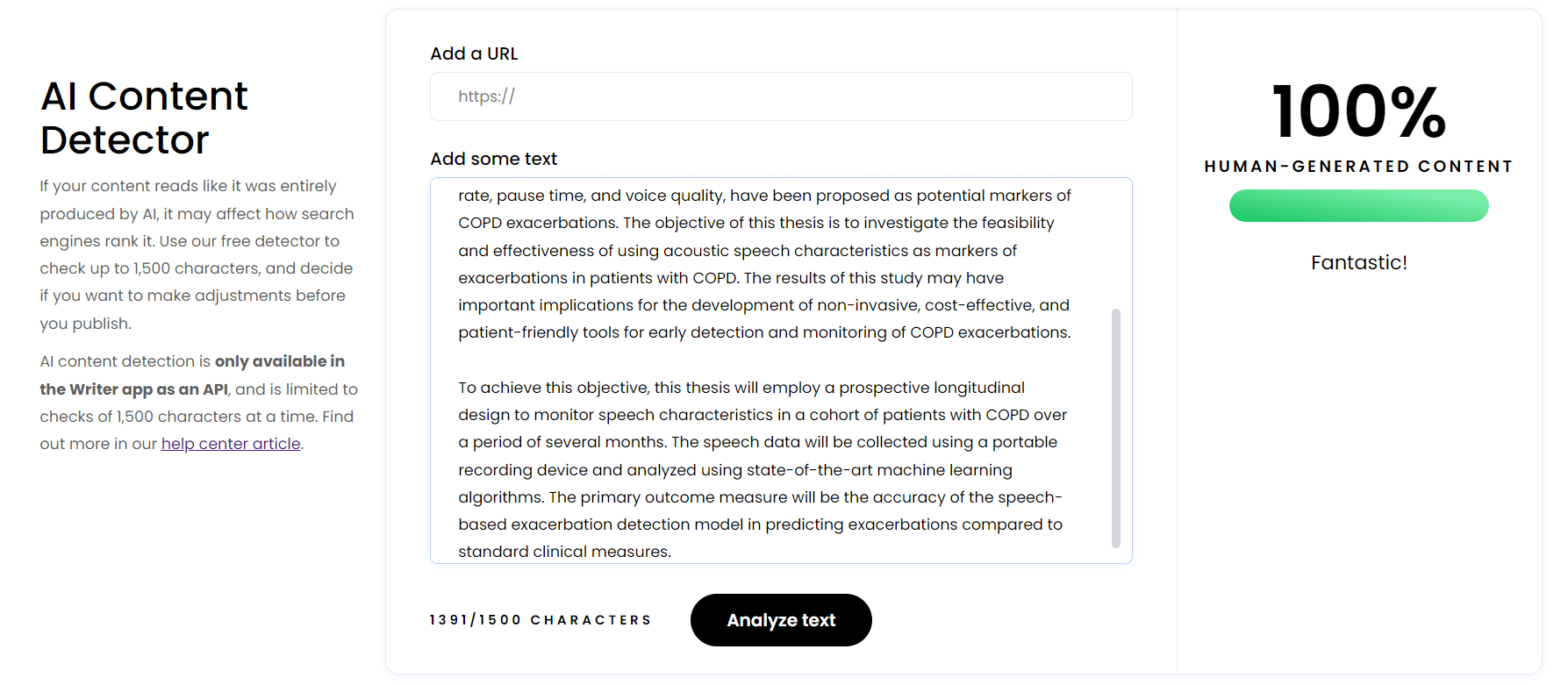

9. Writer

Pros

- Free

- No false positives

- No sign-up required: just paste in text

Cons

- Often fails to load results

- Very low accuracy

- Can’t detect GPT-4 texts at all

- Low character limit

- No text highlighting

The AI detector on Writer’s website didn’t work very well for us. On our first attempt, the results consistently failed to load, making the tool useless. When we tried again a few days later, results did usually load correctly, although they still failed every few checks, requiring a lot of retries.

When the tool was working, its results were still some of the least accurate we saw, at 38%. While it had no false positives, it detected none of the GPT-4 texts and only 70% of the GPT-3.5 texts. Its ability to detect paraphrased or mixed AI texts was the worst of all the tools we tested.

In terms of the information shown, Writer provides a percentage of “human-generated content” but no highlighting to indicate what content has been labeled AI. It also has a character limit of 1,500, the lowest of the tools we tested. We don’t recommend this tool.

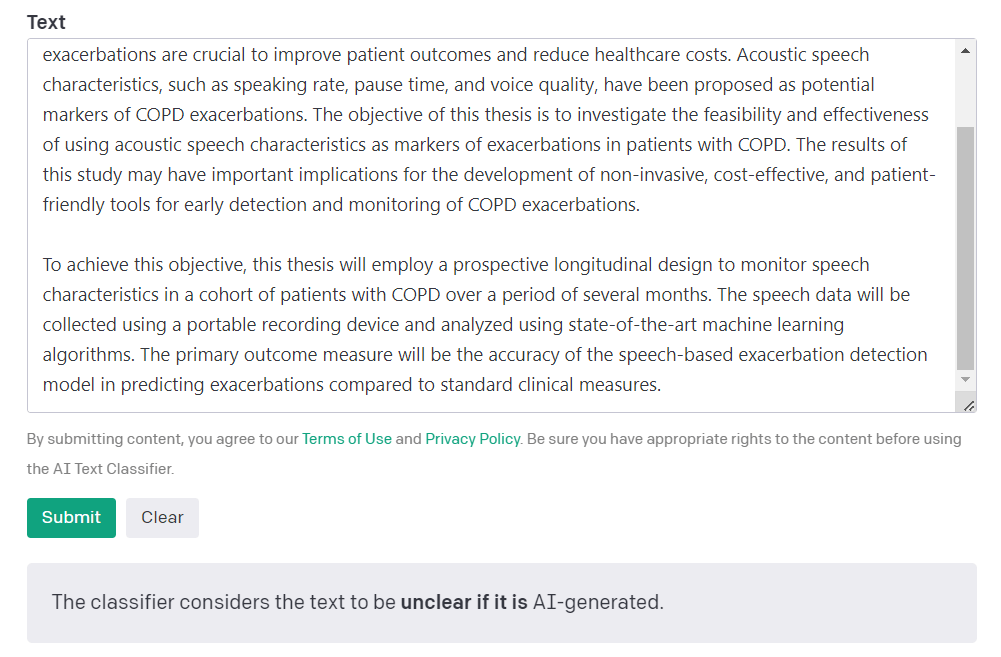

10. AI Text Classifier (OpenAI)

Pros

- Free

- Quick and straightforward to use

Cons

- Very low accuracy

- Very vague results, with no percentage or highlighting

- One false positive

- Sign-up required (same account as ChatGPT)

Although it was developed by OpenAI, the company behind ChatGPT itself, we found that the AI Text Classifier did not provide enough information to be useful. It doesn’t give a percentage or any kind of highlighting, just one of five labels stating that the text is “very unlikely,” “unlikely,” “unclear if it is,” “possibly,” or “likely” AI-generated.

The overall accuracy of the tool was 38%, the same as that of Writer. However, unlike Writer, it did unfortunately have one false positive—a significant problem if you want to use the tool to assess student submissions, for example.

Though the AI Text Classifier is free, it’s necessary to sign up for an OpenAI account to use it. If you’ve already signed up for ChatGPT, then you can sign in with the same account. Regardless, we don’t recommend relying on this tool; the information provided is inadequate, and its accuracy is low.

Research methodology

To carry out this research, we first selected 10 AI detectors that currently show up prominently in search results. We looked mostly at free tools but also included two premium tools with reputations for high accuracy.

We tested all 10 tools with the same texts and the same scoring system for accuracy. The usability and pricing of the tools are discussed in the individual reviews but were not included in the scoring system, which is based purely on accuracy and the number of false positives.

Testing texts

In our testing, we used six categories of texts, with five texts in each category and therefore a total of 30 texts. Each text was between 1,000 and 1,500 characters long (AI detectors are usually inaccurate with texts any shorter than this). The categories were:

- Completely human-written texts

- Texts generated by GPT-3.5 (from ChatGPT)

- Texts generated by GPT-4 (from ChatGPT)

- Parts of the human-written texts, combined with GPT-3.5 text (from ChatGPT)

- The GPT-3.5 texts, but paraphrased by QuillBot

- The human-written texts, but paraphrased by QuillBot

The human-written texts were all on different topics—two quite technical specialist topics and three more general topics—and from different kinds of publication:

- A thesis introduction about chronic obstructive pulmonary disease

- An academic report about artificial intelligence

- A Wikipedia article about the French Revolution

- An online article about Romanticism

- An analysis article about gun control in the US

To make the comparison as fair as possible, all other texts were on the same five topics (e.g., we prompted ChatGPT to “Write a college essay about the French Revolution”). We used the same prompts for the GPT-3.5 and GPT-4 texts, and we used the same settings for all QuillBot paraphrasing (“Standard” mode, maximum number of synonyms).

You can see all the testing texts in the document below, including links to the sources of the human-written texts:

Accuracy scoring

For each scan, we gave one of the following scores:

- 1: Accurately labeled the text as AI or human (within 15% of the right answer)

- 0.5: Not entirely wrong, but not fully accurate (within 40% of the right answer)

- 0: Completely wrong (not within 40% of the right answer)

For example, if a text is 50% AI-generated, then a tool gets 1 for labeling it 55% AI, 0.5 for labeling it 27%, and 0 for labeling it 2% (or 98%). When a tool didn’t show a percentage, we converted the information it did give into a percentage (e.g., OpenAI’s “likely” label = 81–100%).

These scores were added up and turned into accuracy percentages. However, we excluded the five paraphrased human-written texts from this score, leaving 25 scored texts per tool. A tool that scored 1 for every one of those 25 texts would therefore have a 100% accuracy score.

We excluded these texts because AI detectors are not really designed to detect paraphrasing tools, only purely AI-generated text. It’s interesting to investigate whether they can sometimes detect these tools anyway, but it’s not fair to include this in the score.
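The scoring rules above can be sketched in a few lines of Python. This is only an illustration of the rules as described, not the spreadsheet or script actually used for the research. The function names are our own, and we assume that "within 15%" and "within 40%" mean percentage points and that the boundaries are inclusive.

```python
from typing import Iterable


def score_scan(reported_pct: float, true_pct: float) -> float:
    """Score one scan as 1, 0.5, or 0 based on distance from the right answer.

    Assumption: thresholds are percentage points and inclusive.
    """
    error = abs(reported_pct - true_pct)
    if error <= 15:
        return 1.0
    if error <= 40:
        return 0.5
    return 0.0


def accuracy_pct(scores: Iterable[float]) -> float:
    """Turn a list of per-text scores into an accuracy percentage."""
    scores = list(scores)
    return 100 * sum(scores) / len(scores)


# The worked example from the text: a text that is 50% AI-generated.
example_scores = [score_scan(p, 50) for p in (55, 27, 2, 98)]
print(example_scores)
```

With 25 scored texts per tool, each full point is worth 4 percentage points of accuracy, so a tool with 21 points out of 25 would land at 84%.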

The scores indicated in the table at the start are:

- Accuracy (as defined above)

- False positives: How many of the five purely human-written texts are wrongly flagged as AI

- Star rating: The accuracy percentage, turned into a score out of 5, with 0.1 subtracted for each false positive
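The star rating described above can be reproduced with a short calculation. The sketch below is our own illustration: the function name is hypothetical, and rounding to one decimal place is an assumption made to match how the ratings are displayed in the table.

```python
def star_rating(accuracy_pct: float, false_positives: int) -> float:
    """Convert an accuracy percentage to a score out of 5.

    Subtracts 0.1 per false positive. Rounding to one decimal place
    is an assumption, not something stated in the methodology.
    """
    stars = accuracy_pct / 20 - 0.1 * false_positives
    return round(stars, 1)


# Two rows from the table at the start of the article.
print(star_rating(84, 0))  # Winston AI
print(star_rating(76, 1))  # Originality.AI
```

For example, Originality.AI's 76% accuracy corresponds to 3.8 stars, which drops to 3.7 after the deduction for its one false positive.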

Frequently asked questions about AI detectors

- How accurate are AI detectors?


AI detectors aim to identify the presence of AI-generated text (e.g., from ChatGPT) in a piece of writing, but they can’t do so with complete accuracy. In our comparison of the best AI detectors, we found that the 10 tools we tested had an average accuracy of 60%. The best free tool had 68% accuracy, the best premium tool 84%.

Because of how AI detectors work, they can never guarantee 100% accuracy, and there is always at least a small risk of false positives (human text being marked as AI-generated). Therefore, these tools should not be relied upon to provide absolute proof that a text is or isn’t AI-generated. Rather, they can provide a good indication in combination with other evidence.

- How can I detect AI writing?


Tools called AI detectors are designed to label text as AI-generated or human. AI detectors work by looking for specific characteristics in the text, such as a low level of randomness in word choice and sentence length. These characteristics are typical of AI writing, allowing the detector to make a good guess at when text is AI-generated.

But these tools can’t guarantee 100% accuracy. Check out our comparison of the best AI detectors to learn more.

You can also manually watch for clues that a text is AI-generated—for example, a very different style from the writer’s usual voice or a generic, overly polite tone.
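To make the idea of "a low level of randomness in sentence length" more concrete, here is a toy sketch that measures how much sentence lengths vary within a text. It is not how any of the detectors reviewed here actually work; real tools rely on language models and do not publish their exact methods. The function names and the simple punctuation-based sentence splitter are our own assumptions.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, or ? (a crude assumption) and count words per sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Standard deviation of sentence length divided by the mean length.

    Higher values mean more varied sentence lengths. Returns 0.0 when
    there are fewer than two sentences to compare.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "One two three. Four five six. Seven eight nine."
varied = "Stop. The quick brown fox jumped over the lazy dog again."
print(length_variation(uniform), length_variation(varied))
```

A score like this says nothing on its own about who wrote a text. It only illustrates the kind of statistical signal a detector might combine with many others.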

- Can I have ChatGPT write my paper?


No, it’s not a good idea to do so in general—first, because it’s normally considered plagiarism or academic dishonesty to represent someone else’s work as your own (even if that “someone” is an AI language model). Even if you cite ChatGPT, you’ll still be penalized unless this is specifically allowed by your university. Institutions may use AI detectors to enforce these rules.

Second, ChatGPT can recombine existing texts, but it cannot really generate new knowledge. And it lacks specialist knowledge of academic topics. Therefore, it is not possible to obtain original research results, and the text produced may contain factual errors.

However, you can usually still use ChatGPT for assignments in other ways, as a source of inspiration and feedback.

Cite this Scribbr article


Caulfield, J. (2023, September 06). Best AI Detector | Free & Premium Tools Compared. Scribbr. Retrieved October 30, 2023, from https://www.scribbr.com/ai-tools/best-ai-detector/
